Uniswap v3 liquidity analysis

In this notebook we will show how to download liquidity events from Uniswap V3 to your computer as CSV files and use them to construct and analyse liquidity in each pool.

Note: This example is old. There are now better, newer, APIs for writing the event reader loop for JSON-RPC source. Check tutorials section in the documentation for more examples. This example is still ok starting point if you are new into blockchains.

For more background information, see this blog post about the topic

You need to understand Jupyter Notebook and Pandas basics

You need to understand Ethereum and Web3.py basics

You will need to have Ethereum API node and its JSON-RPC URL in order to pull out the data from Ethereum blockchain. The notebook will interactively ask you for your API key.

Download the raw data from Ethereum blockchain

For simplicity, you can sign up for free access to an Ethereum node for example at Infura, however we recommend you to run your own Ethereum node

[11]:

import os

from web3 import Web3, HTTPProvider

from eth_defi.utils import get_url_domain

from eth_defi.provider.multi_provider import create_multi_provider_web3

# Get your node JSON-RPC URL

json_rpc_url = os.environ.get("JSON_RPC_ETHEREUM") # You can pass as env var if running from command line using ipython

if not json_rpc_url:

json_rpc_url = input("Enter your Ethereum mainnet JSON-RPC URL here: ")

# Create a fault tolerant/auto recovery Web3 RPC backend.

# This is especially important if you are using RPC multiplexing service like dRPC or Pokt

# See "MEV protection and multiple JSON-RPCs configuration" tutorial for more information.

web3 = create_multi_provider_web3(json_rpc_url.strip())

print("Using RPC server", get_url_domain(json_rpc_url)) # Don't display API key as the part of URL path or query string

Using RPC server lb.drpc.org

As an example, here we download raw liquidity events from first 100000 blocks after Uniswap V3 factory is created, the events will be stored in several CSV files at /tmp folder.

Depends on your internet connection and latency to the Ethereum node, the scan might take hours. However it can resume in case there is a crash, as we save the last scanned block in a JSON state file.

[12]:

from eth_defi.uniswap_v3.constants import UNISWAP_V3_FACTORY_CREATED_AT_BLOCK

from eth_defi.uniswap_v3.events import fetch_events_to_csv

from eth_defi.event_reader.json_state import JSONFileScanState

# Take 100,000 blocks snapshot from the time Uniswap v3 was deployed

start_block = UNISWAP_V3_FACTORY_CREATED_AT_BLOCK

end_block = UNISWAP_V3_FACTORY_CREATED_AT_BLOCK + 100_000

# Stores the last block number of event data we store

state = JSONFileScanState("/tmp/uniswap-v3-liquidity-scan.json")

fetch_events_to_csv(json_rpc_url, state, start_block=start_block, end_block=end_block)

print("CSV files generated")

Restored previous scan state, data until block 12,469,621, we are skipping 100,000 blocks out of 100,000 total

Saving Uniswap v3 data for block range 12,469,621 - 12,469,621

Wrote 0 PoolCreated events to /tmp/uniswap-v3-poolcreated.csv

Wrote 1 Swap events to /tmp/uniswap-v3-swap.csv

Wrote 0 Mint events to /tmp/uniswap-v3-mint.csv

Wrote 0 Burn events to /tmp/uniswap-v3-burn.csv

CSV files generated

Construct intermediate data

A single raw liquidity event only tells us what happened to specific ticks in a specific pool. To be able to know how much liquidity is in each tick, we first need to construct intermediate data (we call them tick delta events) based on mint and burn events

[13]:

from eth_defi.uniswap_v3.liquidity import create_tick_delta_csv

tick_delta_csv = create_tick_delta_csv("/tmp/uniswap-v3-mint.csv", "/tmp/uniswap-v3-burn.csv")

Then we can use these delta events to aggregate into each tick liquidity

[14]:

from eth_defi.uniswap_v3.liquidity import create_tick_csv

tick_csv = create_tick_csv(tick_delta_csv)

Create a dataframe we can use for further analysis

[15]:

import pandas as pd

tick_df = pd.read_csv(tick_csv)

tick_df

[15]:

| Unnamed: 0 | pool_contract_address | tick_id | liquidity_gross_delta | liquidity_net_delta | |

|---|---|---|---|---|---|

| 0 | 0 | 0x000ea4a83acefdd62b1b43e9ccc281f442651520 | -82140 | 2060173899059917749827 | 2060173899059917749827 |

| 1 | 1 | 0x000ea4a83acefdd62b1b43e9ccc281f442651520 | -82080 | 5508168315607950206 | 5508168315607950206 |

| 2 | 2 | 0x000ea4a83acefdd62b1b43e9ccc281f442651520 | -82020 | 2060173899059917749827 | -2060173899059917749827 |

| 3 | 3 | 0x000ea4a83acefdd62b1b43e9ccc281f442651520 | -81960 | 5508168315607950206 | -5508168315607950206 |

| 4 | 4 | 0x00323a300261042dd5d697e3f92a06279cc7d15b | 62150 | 81896317601094011387162 | 81896317601094011387162 |

| ... | ... | ... | ... | ... | ... |

| 13645 | 13645 | 0xff90fb880da9738b2044b243daf8172bfe413b5c | -123120 | 0 | 0 |

| 13646 | 13646 | 0xff90fb880da9738b2044b243daf8172bfe413b5c | -122040 | 349127115575225137449 | 349127115575225137449 |

| 13647 | 13647 | 0xff90fb880da9738b2044b243daf8172bfe413b5c | -115140 | 0 | 0 |

| 13648 | 13648 | 0xff90fb880da9738b2044b243daf8172bfe413b5c | -111060 | 349127115575225137449 | -349127115575225137449 |

| 13649 | 13649 | 0xff90fb880da9738b2044b243daf8172bfe413b5c | 0 | 3965269664593024571814 | -3965269664593024571814 |

13650 rows × 5 columns

Liquidity analysis example

Choose a random pool from the tick dataframe, for example: UNI/ETH 0.3%

[16]:

pool_address = "0x1d42064fc4beb5f8aaf85f4617ae8b3b5b8bd801"

Extract only the ticks relate to the pool we chose from the dataframe

[17]:

df = tick_df[tick_df.pool_contract_address == pool_address].sort_values(by="tick_id")

df.index = df.tick_id

df

[17]:

| Unnamed: 0 | pool_contract_address | tick_id | liquidity_gross_delta | liquidity_net_delta | |

|---|---|---|---|---|---|

| tick_id | |||||

| -345420 | 1352 | 0x1d42064fc4beb5f8aaf85f4617ae8b3b5b8bd801 | -345420 | 0 | 0 |

| -253260 | 1353 | 0x1d42064fc4beb5f8aaf85f4617ae8b3b5b8bd801 | -253260 | 9540245628022539790 | 9540245628022539790 |

| -242640 | 1354 | 0x1d42064fc4beb5f8aaf85f4617ae8b3b5b8bd801 | -242640 | 2032338076661281779 | 2032338076661281779 |

| -207240 | 1355 | 0x1d42064fc4beb5f8aaf85f4617ae8b3b5b8bd801 | -207240 | 911269521453131094 | 911269521453131094 |

| -206220 | 1356 | 0x1d42064fc4beb5f8aaf85f4617ae8b3b5b8bd801 | -206220 | 1409245106840294 | 1409245106840294 |

| ... | ... | ... | ... | ... | ... |

| 99060 | 1881 | 0x1d42064fc4beb5f8aaf85f4617ae8b3b5b8bd801 | 99060 | 0 | 0 |

| 115140 | 1882 | 0x1d42064fc4beb5f8aaf85f4617ae8b3b5b8bd801 | 115140 | 1077028788640831727 | -1077028788640831727 |

| 131220 | 1883 | 0x1d42064fc4beb5f8aaf85f4617ae8b3b5b8bd801 | 131220 | 10666729901899493 | -10666729901899493 |

| 138180 | 1884 | 0x1d42064fc4beb5f8aaf85f4617ae8b3b5b8bd801 | 138180 | 16150860901245802582 | -16150860901245802582 |

| 391440 | 1885 | 0x1d42064fc4beb5f8aaf85f4617ae8b3b5b8bd801 | 391440 | 9540245628022539790 | -9540245628022539790 |

534 rows × 5 columns

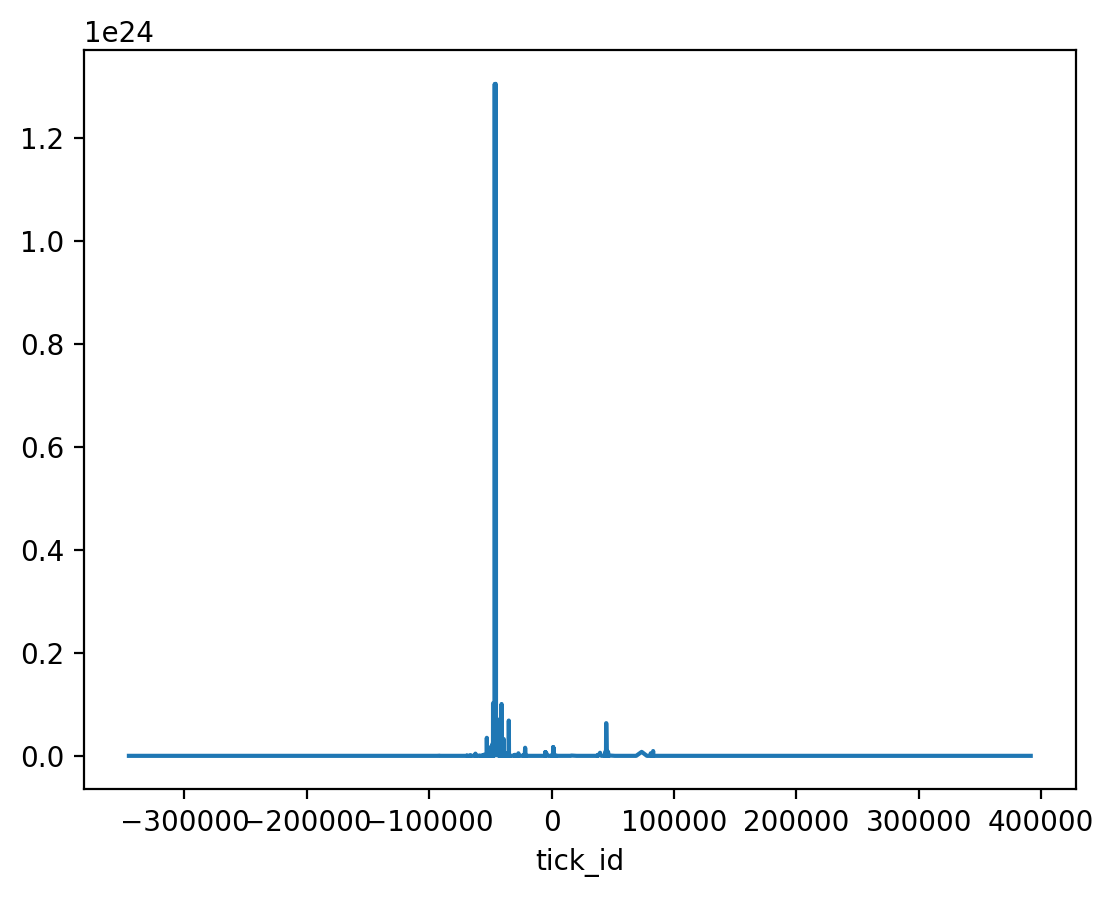

We then can plot the liquidity gross in this pool

[18]:

import matplotlib.pyplot as plt

plt.rcParams["figure.dpi"] = 200

df["liquidity_gross_delta"].astype(float).plot()

[18]:

<Axes: xlabel='tick_id'>

Compare the data with The Graph data

One way to verify whether the data we construct is correct is to compare it with official Uniswap V3 subgraph data.

Note: Uniswap data is no longer available without API key. This section is disabled.

[19]:

tickdelta_df = pd.read_csv(tick_delta_csv)

last_processed_block = tickdelta_df[tickdelta_df.pool_contract_address == pool_address].tail(1).block_number

last_processed_block = int(last_processed_block.values[0])

last_processed_block

[19]:

12469604

Get pool’s state at the same last processed block

[20]:

from eth_defi.uniswap_v3.liquidity import get_pool_state_at_block

uniswap_graph_api_key = os.environ.get("UNISWAP_GRAPH_API_KEY")

if uniswap_graph_api_key:

pool_state = get_pool_state_at_block(pool_address, last_processed_block, api_key=uniswap_graph_api_key)

ticks = pool_state["ticks"]

else:

print("Skipped because no UNISWAP_GRAPH_API_KEY env")

Skipped because no UNISWAP_GRAPH_API_KEY env

Then compare random ticks between our CSV data and the subgraph data

[21]:

import random

if uniswap_graph_api_key:

# get some random ticks from subgraph

for i in range(10):

random_tick = random.choice(ticks)

# get the same tick from dataframe

random_tick_df = df[df.tick_id == int(random_tick["tickIdx"])]

# compare data

assert int(random_tick_df.liquidity_gross_delta.values[0]) == int(random_tick["liquidityGross"])

assert int(random_tick_df.liquidity_net_delta.values[0]) == int(random_tick["liquidityNet"])

else:

print("Skipped because no UNISWAP_GRAPH_API_KEY env")

Skipped because no UNISWAP_GRAPH_API_KEY env